The NOODL license: Licensing African datasets to support research and AI in the Global South

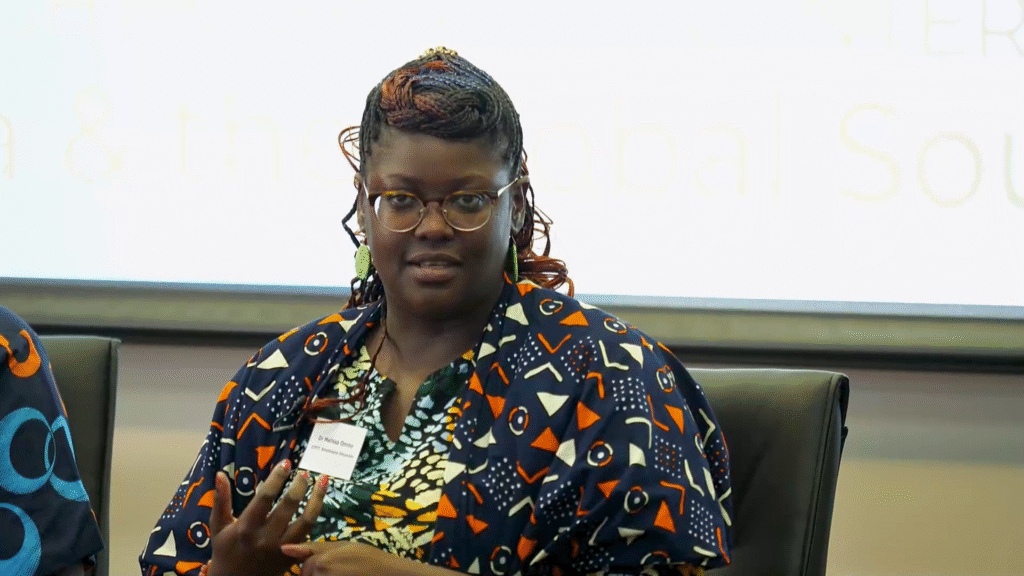

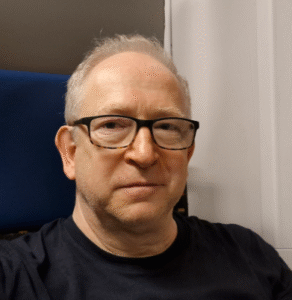

With the increasing prominence of AI in all sectors of our economy and society, access to training data has become an important topic for practitioners and policy makers. In the Global North, a small number of large corporations with deep pockets have gained a head start in AI development, using training data from all over the world. But what about the creators and the communities whose creative works and languages are being used to train AI models? Shouldn’t they also derive some benefit? And what about AI developers in Africa and the Global South, who often struggle to gain access to training data?

In an effort to level the playing field and ensure that AI supports the public interest, legal experts and practitioners in the Global South are developing new tools and protocols to tackle these questions. One approach is to come up with new licenses for datasets. In a pathbreaking initiative, lawyers at Strathmore University in Nairobi have teamed up with their counterparts at the University of Pretoria to develop the NOODL license. NOODL is a tiered license, building on Creative Commons, but with preferential terms for developers in Africa and the Global South. It also opens the door for recognition and a flow of benefits to creators and communities. NOODL was inspired by researchers using African language works to develop Natural Language Processing systems, for purposes such as translation and language preservation.

In this presentation, Dr Melissa Omino, the Head of the Centre for Intellectual Property and Information Technology Law (CIPIT) at Strathmore University in Nairobi, Kenya, talks about the NOODL license. This presentation was originally delivered at the Conference on Copyright and the Public Interest in Africa and the Global South, in Johannesburg in February 2025. The full video of the presentation is available here.
Licensing African Datasets to ensure support for Research and AI in the Global South

Dr Melissa Omino

Introduction

[Ben Cashdan]: We have Dr. Melissa Omino from CIPIT at the University of Strathmore in Nairobi to talk a little bit about a piece of work that they’re doing to try and ensure that the doors are not closed, that there is some opportunity to go on doing AI, doing research in Africa, but not necessarily throwing the doors open to everybody to do everything with all our stuff. Tell us a little bit about that.

[Dr Melissa Omino]: Well, I really like that introduction. Yes, and that was the thinking behind it. Also, it’s interesting that I’m sitting next to Vukosi [Marivate, Professor of Computer Science at University of Pretoria] because Vukosi has a great influence on why the license exists. You’ve heard him talking about Masakhane and the language data that they needed. In the previous ReCreate conference, where we talked about the JW300 dataset, I hope you all know about that. If you don’t know, this is a plug for the ReCreate YouTube channel so that you can go and look at that story. That’s a Masakhane story.

Background: The JW300 Dataset

To make sure that we’re all together in the room, I’ll give you a short synopsis about the JW300 dataset. Vukosi, you can jump in if I get something wrong.

Essentially, Masakhane, as a group of African AI developers, were conducting text data mining online for African languages so that they could build AI tools that solve African problems. We just had a wonderful example right now about the weather in Zulu, things like that. That’s what they wanted to cater for and the solutions they wanted to create. They went ahead and found [that there are] very minimal datasets or data available online for African problem solving, basically in African languages.
But they did find one useful resource, which was on the Jehovah’s Witness website, where it had a lot of African languages because they had translated the Bible into different African languages. They were utilizing this in what was called the JW300 dataset. However, somehow, I don’t know how, you guys thought about copyright. They thought about copyright after text data mining. They thought, hey, can you actually use this dataset? That’s how they approached it. The first thing we did was look at the website.

Copyright notices excluding text and data mining

Most websites have a copyright notice, and a copyright notice lets you know what you can and can’t do with the copyright material that is presented on the website. The copyright notice on the Jehovah’s Witness website specifically excluded text data mining for the data that was there. We went back to Masakhane and said, sorry, you can’t use all this great work that you’ve collected. You can’t use it because it belongs to Jehovah’s Witness, and Jehovah’s Witness is an American company registered in Pennsylvania. They asked us, how is it that this is African languages from different parts of Africa, and the copyright belongs to an American company, and we cannot use the language? I said, well, that’s how the law works. And so they abandoned the JW300 dataset.

This created a new avenue of research because Masakhane did not give up. They became innovative and decided to collect their own language datasets. And not only is Masakhane doing this, Kencorpus is also doing this by collecting their own language datasets.

Building a Corpus of African Language Data

But where do you get African languages from? People. You go to the people to collect the language, right? If you’re lucky, you can find a text that has the language, but not all African languages will have the text. Your first source would be the communities that speak the African languages, right?
So you’re funded because collecting language is expensive – Vukosi can confirm. He’s collecting 3,000 languages or 3,000 hours of languages. His budget is crazy to collect that. So you collect the language. You go to the community, record them however you want to do that. Copyright experts will tell you the minute you